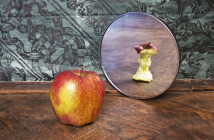

Earlier this year, Microsoft made headlines when it debuted Tay, a new chatbot modeled to speak like a teenage girl, which rather dramatically turned into “

” of its release, as The Telegraph put it. The Twitter bot was built to “learn” by parroting the words and phrases of the other Twitter users who interacted with it, and – because, you know, Twitter – those users quickly realized that they could teach Tay to say some really horrible things. Tay soon began responding with increasingly incendiary commentary, denying the Holocaust and linking feminism to cancer, for starters.

Curated from hackeducation.com

We didn’t like Microsoft’s Clippy, and we like asking other people for help. Bots aren’t new, as this article explains, but can they deliver something useful for performance and learning? That remains to be seen.